Videos

See AI2's full collection of videos on our YouTube channel.

Robot Learning by Understanding Egocentric Videos

April 22, 2024 | Saurabh Gupta
Abstract: True gains of machine learning in AI sub-fields such as computer vision and natural language processing have come about from the use of large-scale diverse datasets for learning. In this talk, I will discuss how we can leverage large-scale diverse data in the form of egocentric videos (first-person…

Project Sidewalk: Crowd+AI Techniques to Map and Assess Every Sidewalk in the World

April 18, 2024 | Jon Froehlich
Abstract: Sidewalks are critical to human mobility, local commerce, and environmentally sustainable cities. In this interactive talk, we will showcase our 12+ years of research in developing scalable techniques to map, assess, and visualize sidewalks throughout the world. See https://projectsidewalk.org for more…

Does Generative AI Infringe Copyright?

April 10, 2024 | James Grimmelmann
Abstract: A discussion of the copyright-law aspects of generative AI, based on ["Talkin’ ’Bout AI Generation: Copyright and the Generative-AI Supply Chain"](https://james.grimmelmann.net/files/articles/talkin-bout-ai-generation.pdf). Here's a blog post about the paper he co-authored: https://genlaw.org/explainers…

Figuring out how the world works: causality in a world full of real people

February 28, 2024 | Konrad Kording
Abstract: Causality is key to many branches of science, engineering, and the alignment of AI systems. I will start by highlighting the difficulties of causal inference in the real world, and build some intuition about why causality is difficult in the real world while it seems easy in our minds. I will continue by…

Machine-Checked Proofs, and the Rise of Formal Methods in Mathematics

February 16, 2024 | Leonardo de Moura
Abstract: The domains of mathematics and software engineering are witnessing a rapid increase in complexity. As generative artificial intelligence emerges as a potential force in mathematical exploration, a pressing imperative arises: ensuring the correctness of machine-generated proofs and software constructs. The Lean…

Beyond Test Accuracies for Studying Deep Neural Networks

February 9, 2024 | Kyunghyun Cho
Abstract: Already in 2015, Leon Bottou discussed the prevalence and end of the training/test experimental paradigm in machine learning. The machine learning community has, however, continued to stick to this paradigm until now (2023), relying almost entirely and exclusively on the test-set accuracy, which is a…

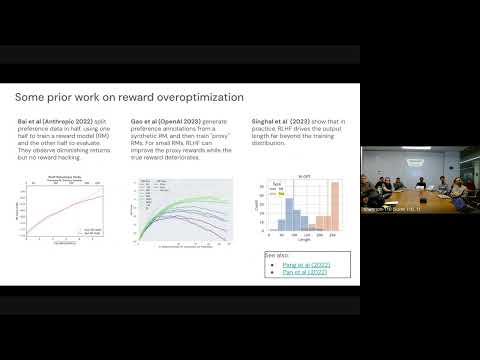

Helping or Herding? Reward Model Ensembles Mitigate but do not Eliminate Reward Hacking

February 2, 2024 | Jonathan Berant
Abstract: Reward models are commonly used in the process of large language model alignment but are prone to reward hacking, where the true reward diverges from the estimated reward as the language model drifts out-of-distribution. In this talk, I will discuss a recent study on the use of reward ensembles to…

Integrated Systems for Computational Scientific Discovery

January 23, 2024 | Pat Langley
Abstract: In this talk, I challenge the AI research community to develop and evaluate integrated discovery systems. There has been a steady stream of AI work on scientific discovery since the 1970s, much of it leading to published results in fields like astronomy, biology, chemistry, and physics. However, most…

Language AI for RNA Virus and RNA Vaccine

November 29, 2023 | Liang Huang
Abstract: Linguistics and biology are two sides of the same coin. This talk features several highly unexpected connections between them which yield efficient algorithms with substantial biological impacts. One such connection (Nature, 2023) is between messenger RNA (mRNA) vaccines and formal language theory…

OpenWebMath: An Open Dataset of High-Quality Mathematical Web Text

November 28, 2023 | Keiran Paster
Abstract: There is growing evidence that pretraining on high-quality, carefully thought-out tokens such as code or mathematics plays an important role in improving the reasoning abilities of large language models. For example, Minerva, a PaLM model finetuned on billions of tokens of mathematical documents from…