Videos

See AI2's full collection of videos on our YouTube channel.

Applied AI in High-Expertise Settings, or Curation as Programming

June 29, 2022 | Bill Howe

The success of large AI models in challenging (yet conceptually simple) perception and comprehension tasks (e.g., generating images from a text description) is motivating new applications of these methods in areas previously considered to require human expertise. For example, deep learning has shown promise in…

Machines Making Moral Decisions

June 22, 2022 | Kurt Gray

Abstract: Humans are increasingly working with AI-powered algorithms, sharing the road with autonomous vehicles, sharing hospital wards with autonomous surgery robots, and making joint decisions with autonomous algorithms. As we adapt to the increasing presence of AIs playing significant roles in our…

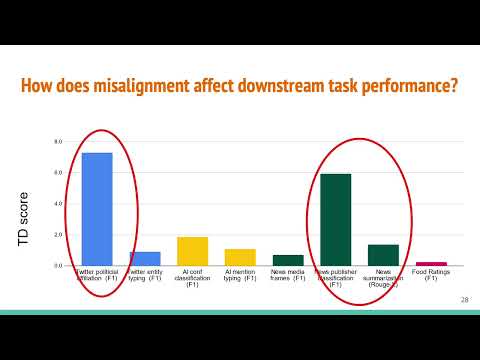

Time Waits for No One! Analysis and Challenges of Temporal Misalignment

June 14, 2022 | Kelvin Luu

When an NLP model is trained on text data from one time period and tested or deployed on data from another, the resulting temporal misalignment can degrade end-task performance. In this work, we establish a suite of eight diverse tasks across different domains (social media, science papers, news, and reviews) and…

Prompt Waywardness: The Curious Case of Discretized Interpretation of Continuous Prompts

June 10, 2022 | Daniel Khashabi

Fine-tuning continuous prompts for target tasks has recently emerged as a compact alternative to full model fine-tuning. Motivated by these promising results, we investigate the feasibility of extracting a discrete (textual) interpretation of continuous prompts that is faithful to the problem they solve. In…

DREAM: Improving Situational QA by First Elaborating the Situation

June 7, 2022 | Yuling Gu

When people answer questions about a specific situation, e.g., "I cheated on my mid-term exam last week. Was that wrong?", cognitive science suggests that they form a mental picture of that situation before answering. While we do not know how language models (LMs) answer such questions, we conjecture that they…

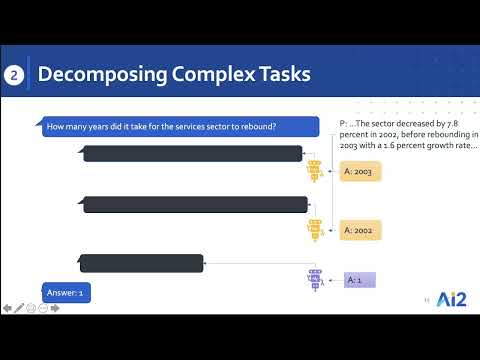

Text Modular Networks: Learning to Decompose Tasks in the Language of Existing Models

June 6, 2022 | Tushar Khot

NAACL '21 presentation of our paper: https://api.semanticscholar.org/CorpusID:221448158

We propose a general framework called Text Modular Networks (TMNs) for building interpretable systems that learn to solve complex tasks by decomposing them into simpler ones solvable by existing models. To ensure solvability…

Reframing Instructional Prompts to GPTk’s Language

June 5, 2022 | Swaroop Mishra

This video summarizes the paper at https://aclanthology.org/2022.findings-acl.50/.

Abstract: What kinds of instructional prompts are easier for language models (LMs) to follow? We study this question through an extensive empirical analysis that sheds light on important features of successful instructional…

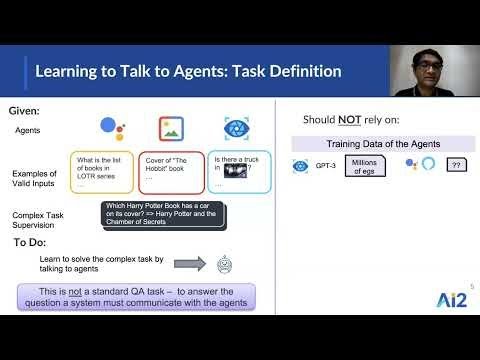

Hey AI, Can You Solve Complex Tasks by Talking to Agents?

May 22, 2022 | Tushar Khot

ACL '22 talk for the paper: https://api.semanticscholar.org/CorpusID:248666080

Training giant models from scratch for each complex task is resource- and data-inefficient. To help develop models that can leverage existing systems, we propose a new challenge: Learning to solve complex tasks by communicating with…

Why Natural Language is the Right Vehicle for Complex Reasoning

May 11, 2022 | Greg Durrett

Abstract: Despite their widespread success, end-to-end transformer models consistently fall short in settings involving complex reasoning. Transformers trained on question answering (QA) tasks that seemingly require multiple steps of reasoning often achieve high performance by taking "reasoning shortcuts." We…

Data Leverage: A Framework for Empowering the Public and Mitigating Harms of AI

May 10, 2022 | Nicholas Vincent

Many powerful computing technologies rely on both implicit and explicit data contributions from the public. This dependency suggests a potential source of leverage for the public in its relationship with technology companies: by reducing, stopping, redirecting, or otherwise manipulating data contributions, a…