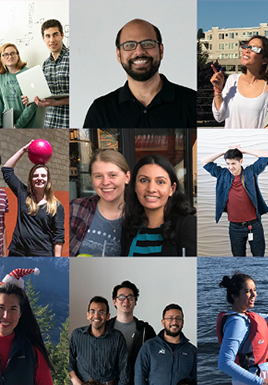

Why AI2?

We're a team of engineers and researchers with diverse backgrounds collaborating to solve some of the toughest problems in AI research.

Work With Us

AI2 is a non-profit focused on contributing to AI research and engineering efforts intended to benefit the common good. Join us to tackle an extraordinary set of challenges.

View open jobs

Diversity, Equity, & Inclusion

We are committed to fostering a diverse, inclusive environment within our institute and to encouraging these values in the wider research community.

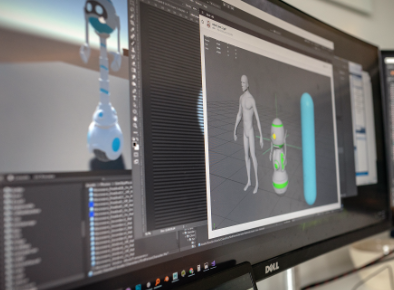

See how

Natural Language Processing

Machine reasoning, common sense for AI, and language modeling

AI for the Environment

Applied AI for climate modeling and wildlife protection

EarthRanger

EarthRanger is a real-time software solution that aids protected area managers, ecologists, and wildlife biologists in making more informed operational decisions for wildlife conservation.

Learn more

Skylight

Skylight helps reduce illegal, unreported, and unregulated (IUU) fishing through technology that provides transparency and actionable intelligence for maritime enforcement.

Learn more

Climate Modeling

Our goal is to improve the world’s understanding of climate change, its effects, and what can be done now. Better data and technologies will inform how we mitigate and adapt to global impacts, such as sea level rise, community destruction, and biodiversity loss.

Learn more

Wildlands

We apply machine learning to support wildland fire management and research workflows, focusing our work on ground-level fuels that are not measurable with current satellite technology.

Learn more