Question Understanding

Recent Papers

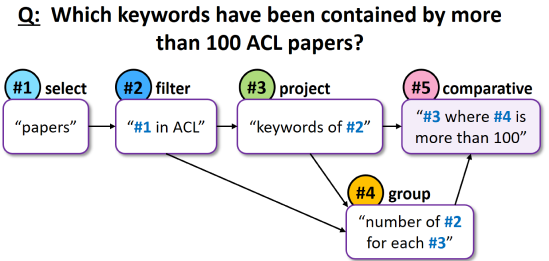

Break It Down: A Question Understanding Benchmark

Tomer Wolfson, Mor Geva, Ankit Gupta, Matt Gardner, Yoav Goldberg, Daniel Deutch, Jonathan BerantTACL • 2020 Understanding natural language questions entails the ability to break down a question into the requisite steps for computing its answer. In this work, we introduce a Question Decomposition Meaning Representation (QDMR) for questions. QDMR constitutes the…Injecting Numerical Reasoning Skills into Language Models

Mor Geva, Ankit Gupta, Jonathan BerantACL • 2020 Large pre-trained language models (LMs) are known to encode substantial amounts of linguistic information. However, high-level reasoning skills, such as numerical reasoning, are difficult to learn from a language-modeling objective only. Consequently…Obtaining Faithful Interpretations from Compositional Neural Networks

Sanjay Subramanian, Ben Bogin, Nitish Gupta, Tomer Wolfson, Sameer Singh, Jonathan Berant, Matt Gardner ACL • 2020 Neural module networks (NMNs) are a popular approach for modeling compositionality: they achieve high accuracy when applied to problems in language and vision, while reflecting the compositional structure of the problem in the network architecture. However…CommonsenseQA: A Question Answering Challenge Targeting Commonsense Knowledge

Alon Talmor, Jonathan Herzig, Nicholas Lourie, Jonathan BerantNAACL • 2019 When answering a question, people often draw upon their rich world knowledge in addition to the particular context. Recent work has focused primarily on answering questions given some relevant document or context, and required very little general background…The Web as a Knowledge-base for Answering Complex Questions

Alon Talmor, Jonathan BerantNAACL • 2018 Answering complex questions is a time-consuming activity for humans that requires reasoning and integration of information. Recent work on reading comprehension made headway in answering simple questions, but tackling complex questions is still an ongoing…

Recent Datasets

StrategyQA

2,780 implicit multi-hop reasoning questions

StrategyQA is a question-answering benchmark focusing on open-domain questions where the required reasoning steps are implicit in the question and should be inferred using a strategy. StrategyQA includes 2,780 examples, each consisting of a strategy question, its decomposition, and evidence paragraphs.

Break

83,978 examples sampled from 10 question answering datasets over text, images and databases.

Break is a human annotated dataset of natural language questions and their Question Decomposition Meaning Representations (QDMRs). Break consists of 83,978 examples sampled from 10 question answering datasets over text, images and databases.

CommonsenseQA

12,102 multiple-choice questions with one correct answer and four distractor answers

CommonsenseQA is a new multiple-choice question answering dataset that requires different types of commonsense knowledge to predict the correct answers. It contains 12,102 questions with one correct answer and four distractor answers.

ComplexWebQuestions

34,689 complex questions and their answers, web snippets, and SPARQL query

ComplexWebQuestions is a dataset for answering complex questions that require reasoning over multiple web snippets. It contains a large set of complex questions in natural language, and can be used in multiple ways: 1) By interacting with a search engine, which is the focus of our paper (Talmor and Berant, 2018); 2) As a reading comprehension task: we release 12,725,989 web snippets that are relevant for the questions, and were collected during the development of our model; 3) As a semantic parsing task: each question is paired with a SPARQL query that can be executed against Freebase to retrieve the answer.

Team

Tomer WolfsonIntern

Tomer WolfsonIntern