Videos

See AI2's full collection of videos on our YouTube channel.

Heroes of NLP: Oren Etzioni

October 13, 2020 | DeepLearning.AI
Heroes of NLP is a video interview series featuring Andrew Ng, the founder of DeepLearning.AI, in conversation with thought leaders in NLP. Watch Andrew lead an enlightening discourse around how these industry and academic experts started in AI, their previous and current research projects, how their…

Is GPT-3 Intelligent? A Directors' Conversation with Oren Etzioni

October 1, 2020 | Stanford HAI
In this latest Directors’ Conversation, HAI Denning Family Co-director John Etchemendy’s guest is Oren Etzioni, Allen Institute for Artificial Intelligence CEO, company founder, and professor of computer science. Here the two discuss the language prediction model GPT-3, a better approach to an AI Turing test, and the…

Choosing the Right Statistical Approach to Assess Hypotheses – ACL 2020

July 13, 2020 | Daniel Khashabi
A survey and comparison of the tools used to assess hypotheses in NLP. Further details can be found in the paper 'Not All Claims are Created Equal: Choosing the Right Approach to Assess Your Hypotheses'. https://www.semanticscholar.org/paper/Not-All-Claims-are-Created-Equal%3A-Choosing-the-to-Azer-Khashabi…

Syntactic Search by Example – ACL 2020

July 6, 2020 | Micah Schlain
Micah Schlain discusses the work on syntactic search happening at AI2 Israel. Check out our system: https://allenai.github.io/spike/

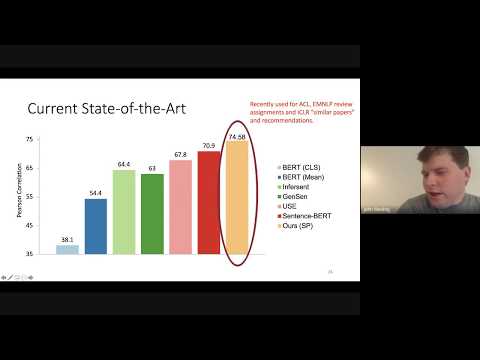

Learning and Applications of Paraphrastic Representations for Natural Language

June 18, 2020 | John Wieting
Representation learning has had a tremendous impact in machine learning and natural language processing (NLP), especially in recent years. Learned representations provide useful features needed for downstream tasks, allowing models to incorporate knowledge from billions of tokens of text. The result is better…

Neuro-symbolic Learning Algorithms for Automated Reasoning

April 30, 2020 | Forough Arabshahi
Humans possess impressive problem-solving and reasoning capabilities, be it mathematical, logical, or commonsense reasoning. Computer scientists have long dreamed of building machines with reasoning and problem-solving abilities similar to those of humans. Currently, there are three main challenges in realizing this…

From 'F' to 'A' on the N.Y. Regents Science Exams: An Overview of the Aristo Project

March 27, 2020 | Peter Clark
AI has achieved remarkable mastery over games such as Chess, Go, and Poker, and even Jeopardy!, but the rich variety of standardized exams has remained a landmark challenge. Even as recently as 2016, the best AI system could achieve merely 59.3% on an 8th-grade science exam. This talk reports success on the…

Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer

January 6, 2020 | Colin Raffel
Transfer learning, where a model is first pre-trained on a data-rich task before being fine-tuned on a downstream task, has emerged as a powerful technique in natural language processing (NLP). The effectiveness of transfer learning has given rise to a diversity of approaches, methodology, and practice. In this…

Towards AI Complete Question Answering: Combining Text-based, Unanswerable and World Knowledge Questions

December 11, 2019 | Anna Rogers
The recent explosion in question answering research has produced a wealth of both reading comprehension and commonsense reasoning datasets. Combining them presents a different kind of challenge: deciding not simply whether information is present in the text, but also whether a confident guess could be made for the…

Learning Dynamics of LSTM Language Models

November 20, 2019 | Naomi Saphra
Research has shown that neural models implicitly encode linguistic features, but there has been little work exploring how these encodings arise as the models are trained. I will be presenting work on the learning dynamics of neural language models from a variety of angles. Using Singular Vector Canonical…