Videos

See AI2's full collection of videos on our YouTube channel.

Training Human-AI Teams

March 18, 2024 | Hussein Mozannar
Abstract: AI systems, including large language models (LLMs), are augmenting the capabilities of humans in settings such as healthcare and programming. I first showcase preliminary evidence of the productivity gains of LLMs in programming tasks. To understand opportunities for model improvements, I developed a…

Making Health Knowledge Accessible Through Personalized Language Processing

March 11, 2024 | Yue Guo
Abstract: The pandemic exposed the difficulties the general public faces when attempting to use scientific information to guide their health-related decisions. Though widely available in scientific papers, the information required to guide these decisions is often not accessible: medical jargon, scientific…

Figuring out how the world works: causality in a world full of real people

February 28, 2024 | Konrad Kording
Abstract: Causality is key to many branches of science, engineering, and the alignment of AI systems. I will start by highlighting the difficulties of causal inference in the real world, and build some intuition about why in the real world causality is difficult while it seems easy in our mind. I will continue by…

Machine-Checked Proofs, and the Rise of Formal Methods in Mathematics

February 16, 2024 | Leonardo de Moura
Abstract: The domains of mathematics and software engineering witness a rapid increase in complexity. As generative artificial intelligence emerges as a potential force in mathematical exploration, a pressing imperative arises: ensuring the correctness of machine-generated proofs and software constructs. The Lean…

Beyond Test Accuracies for Studying Deep Neural Networks

February 9, 2024 | Kyunghyun Cho
Abstract: Already in 2015, Leon Bottou discussed the prevalence and end of the training/test experimental paradigm in machine learning. The machine learning community has however continued to stick to this paradigm until now (2023), relying almost entirely and exclusively on the test-set accuracy, which is a…

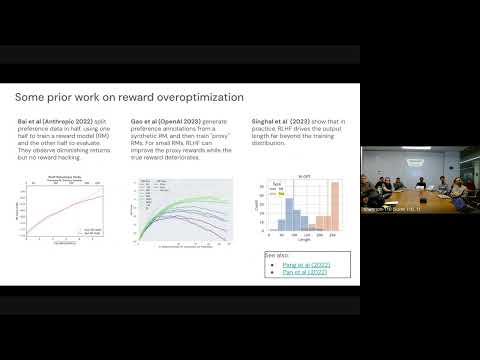

Helping or Herding? Reward Model Ensembles Mitigate but do not Eliminate Reward Hacking

February 2, 2024 | Jonathan Berant
Abstract: Reward models are commonly used in the process of large language model alignment but are prone to reward hacking, where the true reward diverges from the estimated reward as the language model drifts out-of-distribution. In this talk, I will discuss a recent study on the use of reward ensembles to…

Integrated Systems for Computational Scientific Discovery

January 23, 2024 | Pat Langley
Abstract: In this talk, I challenge the AI research community to develop and evaluate integrated discovery systems. There has been a steady stream of AI work on scientific discovery since the 1970s, much of it leading to published results in fields like astronomy, biology, chemistry, and physics. However, most…

Language AI for RNA Virus and RNA Vaccine

November 29, 2023 | Liang Huang
Abstract: Linguistics and biology are two sides of the same coin. This talk features several highly unexpected connections between them which yield efficient algorithms with substantial biological impacts. One such connection (Nature, 2023) is between messenger RNA (mRNA) vaccines and formal language theory…

OpenWebMath: An Open Dataset of High-Quality Mathematical Web Text

November 28, 2023 | Keiran Paster
Abstract: There is growing evidence that pretraining on high-quality, carefully thought-out tokens such as code or mathematics plays an important role in improving the reasoning abilities of large language models. For example, Minerva, a PaLM model finetuned on billions of tokens of mathematical documents from…

The Worlds I See: Curiosity, Exploration and Discovery at the Dawn of AI

November 13, 2023 | Dr. Fei-Fei Li
Dr. Fei-Fei Li joins us for a fireside chat with Ali. She discusses her latest book, The Worlds I See: Curiosity, Exploration, and Discovery at the Dawn of AI.
Bio: Dr. Fei-Fei Li is the inaugural Sequoia Professor in the Computer Science Department at Stanford University, and Co-Director of Stanford’s Human…